The octopus and the rake

Being able to effectively command an army of agents might become an essential skill

Are you an octopus?

A while ago, Scott Werner published a blog post that has lived rent-free in my head ever since. In the post, he describes how his work as a software engineer has been transformed by AI agents like Claude Code. Instead of focusing on a single problem at a time, he has started switching from session to session, tackling multiple projects at once. This is possible because the AI agents handle the implementation while he steers the ship. He compares this to an octopus, which has distributed neurons in its arms that allow them to act semi-independently. I shared this post with my colleagues and it caused a noticeable ripple among us. I suspect this is because the article accurately articulates what many of us have been feeling for quite some time: We feel like we can get much more work done since the advent of LLMs and AI agents.

Now, I understand that there is still some skepticism around AI, especially from people with deep technical expertise. They might have experienced some of the shortcomings of current AI systems and decided that AI is not as helpful as many proclaim. But I think they might just be too focused on their own specific use case and haven't taken a step back to see the bigger picture: It's less about any single task and more about the fact that you can now do so much more in parallel, if (and that is still a big if) you are able to embrace becoming an octopus.

But how do you become an octopus? And what does it mean to effectively manage AI agents without just generating a bunch of AI slop? One thing is certain: AI won't magically make you an expert in every field, but used correctly, it will raise the baseline for many tasks. In Scott's case, the octopus analogy applies to AI-assisted coding, where he tackles multiple coding-related tasks in parallel. But I think the analogy really shines when you zoom out and look at multiple areas of application. I, for one, have been juggling so much more ever since I started leaning into AI systems, and I am convinced that it has unlocked a vast array of new capabilities for me. You can check out this post for a short summary of what I mean.

Raking in the benefits

When it comes to skills, there is the concept of T-shaped skills, which describes generalist-specialists: They have broad base knowledge (represented by the horizontal bar of the T) but also deep expertise in one specific area (the vertical stem). This has long been the archetype for high-ranking roles in academia and industry. The assumption is that people with this kind of skill set can quickly grasp the essentials of many other fields while also contributing to state-of-the-art work in their own field of expertise. But what if, instead of a T, we could have multiple areas of deeper expertise? This idea is not new, but a rather humorous tweet brought it to my attention again. I subsequently coined the term "rakeist", but I don't have high hopes of it catching on, given its rather small Levenshtein distance to a variant that replaces the k with a p. But jokes aside: What if we constantly expanded our horizons by adding more and more areas of expertise to our skill set and ended up with something that looks like a rake?

People have talked about lifelong learning for ages, but the reality for a lot of people was that, with a job and a family, there was very little time left to pick up new skills. It's of course not impossible, and in some professions, like software engineering, constantly learning new ways of doing things has basically been a necessity. There is a saying in German that roughly translates to "I don't have any time to sharpen my axe, I've got to chop down trees!". Phrases like this are common in business environments because they humorously describe a familiar reality: When you only have so much time for the work you are being paid to do, you usually spend most of it doing that work rather than doing things that might make it easier in the future. But what if sharpening the axe has actually become much easier over the last couple of years? What if you had an army of (somewhat lopsided) experts that never get tired and are available 24/7? What if they could not only help you do things but also help you expand your own skills? This is exactly what AI can be.

Stepping out of the comfort zone

In one of his recent videos on the topic of vibe coding, Theo said something that caught me off guard: He believes you should not lean into agentic coding if you couldn't do the implementation yourself when necessary. I have been tackling many things thanks to agentic coding tools (opencode btw), and some of them are beyond what I could easily implement myself (note: feel free to replace the word "agentic" with "LLMs + tools in a while loop"). I believe I could learn to do most of it, but it would probably take me a prohibitive amount of time if I had to learn everything the old-fashioned way. According to Theo, if you are not able to clean up the mess an AI agent produces when it isn't kept on a tight enough leash, you should not even try to wield this mighty sword. He does, however, strongly encourage people to use AI for learning by asking questions outside of their IDE and then trying to implement things themselves. This is very similar to what DHH has been saying for quite some time.

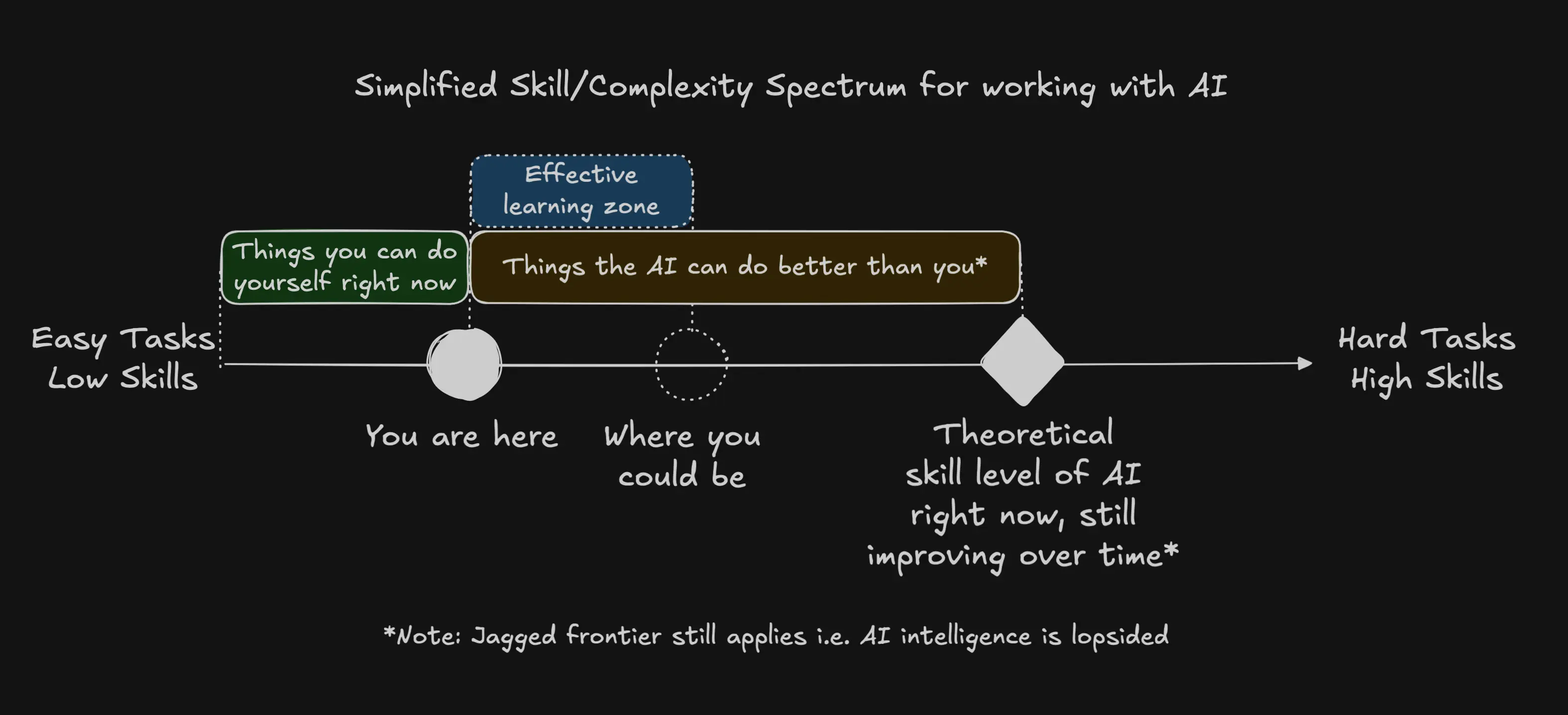

After letting this sink in, I have to say that I don't fully agree with this position. I firmly believe that trying to do things that are a bit above your skill level can actually be the best way of learning. I am not saying you should go and try something far outside your comfort zone, because the moment something goes wrong and even the AI can't fix it anymore, you are indeed screwed. But just beyond the edge of your current skill level, there is what I would call the effective learning zone.

I picked up this idea from a nice article by Massimo Re Ferrè on a framework for working with AI, which I have simplified in the image above. The learning zone lies above your current skill level, but you are still able to understand everything well enough and can intuitively judge whether a result is good or not. This is the zone I personally like to live in, because attempting something you don't yet fully know how to do and then seeing it succeed is highly motivating. And at the end of the day, what truly counts is the result you are able to achieve. If AI is helping you create something that works and is of use to you, it might not even matter whether you fully understand everything yet. But one thing is clear to me: If you don't have a strong desire to learn as much as possible and expand your skill set in the process, you're gonna have a hard time. There has never been a better time to learn new things than now. Just don't forget to let your ideas marinate from time to time.

Growing tentacles

One important detail of Scott's post is the aspect of collaboration. When considering the octopus, it seems that traditional team roles and communication patterns could actually be counterproductive. When you work with agents effectively, you yourself understand what is happening at all times. But good luck explaining everything to someone else. Imagine having to sift through someone else's chat histories to piece together everything they have been working on. At the same time, an octopus can only be truly effective when given enough freedom to tackle many problems that were traditionally split among different roles. I don't yet have an answer to whether this conundrum can be easily resolved in existing organizations with traditional hierarchies and roles. One thing I do know is that you should not wait around for someone else to figure it out. So go grow some tentacles, and let's get things done!